Robots That Know Themselves: MIT’s Vision System Gives Machines Body Awareness

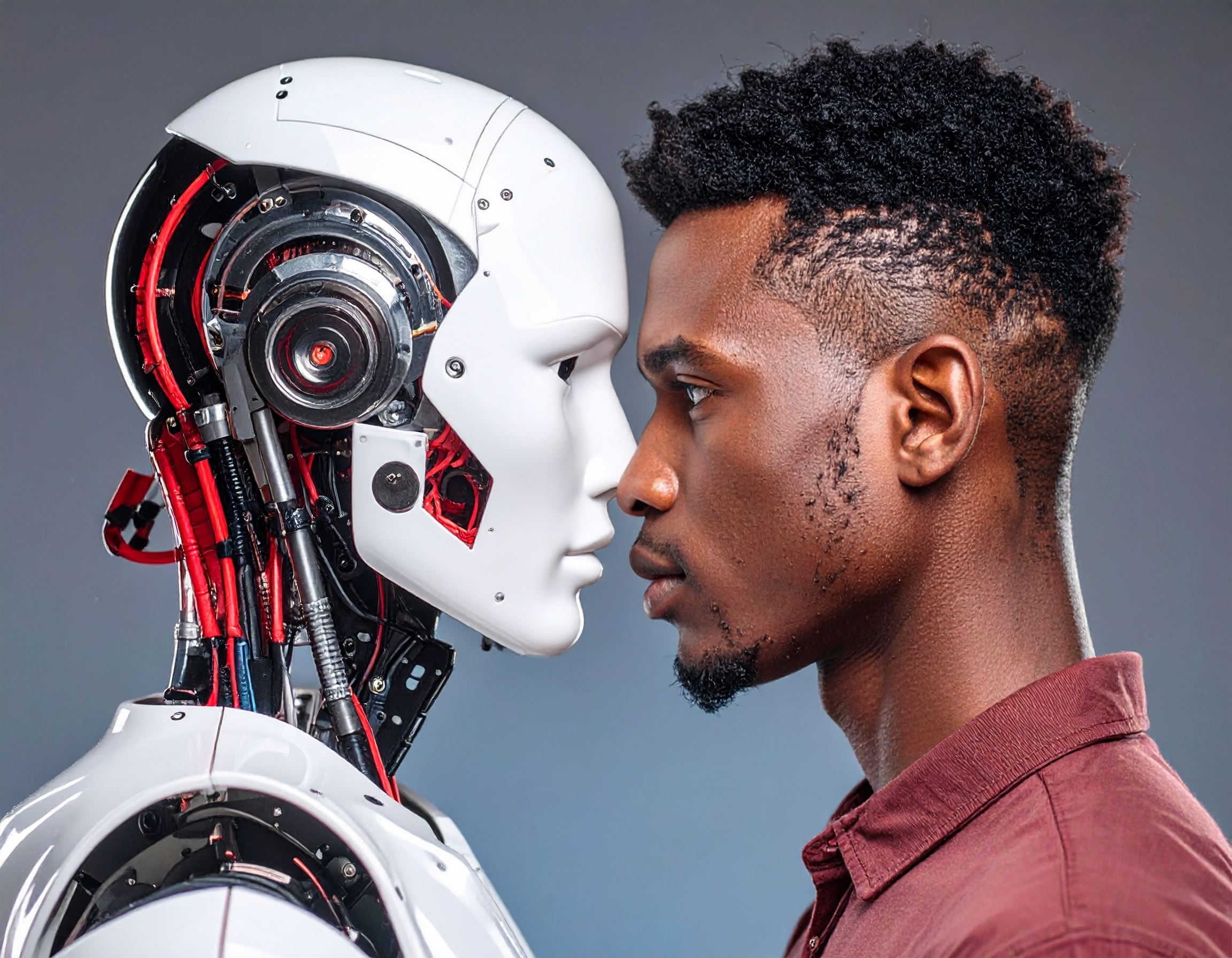

Teaching Robots to See Themselves

In July 2025, MIT researchers introduced a system that lets robots learn how their own bodies move from vision alone. Instead of relying on sensors embedded in joints or limbs, the method has robots watch themselves through external cameras and learn their movements with an AI model. The system, named V-SUIT (Visual Self Understanding via Imaging and Tracking), offers a cheaper and more adaptable alternative to traditional sensor-heavy approaches to robot modeling and control.

V-SUIT works by building a 3D model of the robot’s body from visual observations. Once trained, the model lets a robot mentally simulate its posture and motion, which is key to tasks like object manipulation, walking, or self-correction. The AI model behind this capability needs only standard RGB cameras and visual learning to build the robot’s sense of self.
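The article does not describe V-SUIT’s internals, so what follows is only a toy sketch of the general idea of learning a body model from camera observations alone. It is not MIT’s method. It assumes a hypothetical two-link planar arm whose true geometry (`LINK1`, `LINK2`) is hidden from the learner and used only to simulate what an external camera would see. The robot wiggles its joints slightly, records how the observed tip moves, and fits a local linear model (a Jacobian, estimated by least squares) that it can then use to “mentally simulate” the effect of a new command.

```python
import math
import random

# Hypothetical arm geometry: hidden from the learner and used only to
# simulate what an external camera would observe.
LINK1, LINK2 = 1.0, 0.8


def camera_observe(q):
    """Simulated camera: return the (x, y) position of the arm's tip for joint angles q."""
    q1, q2 = q
    x = LINK1 * math.cos(q1) + LINK2 * math.cos(q1 + q2)
    y = LINK1 * math.sin(q1) + LINK2 * math.sin(q1 + q2)
    return (x, y)


def fit_jacobian(q, n_trials=50, step=1e-3, seed=0):
    """Estimate J (tip motion per joint motion) from random joint wiggles seen by the camera."""
    rng = random.Random(seed)
    base = camera_observe(q)
    # Normal-equation terms for the least-squares fit dp ~= J @ dq
    ata = [[0.0, 0.0], [0.0, 0.0]]  # ata[a][b] = sum of dq[a] * dq[b]
    atb = [[0.0, 0.0], [0.0, 0.0]]  # atb[a][i] = sum of dq[a] * dp[i]
    for _ in range(n_trials):
        dq = (rng.uniform(-step, step), rng.uniform(-step, step))
        moved = camera_observe((q[0] + dq[0], q[1] + dq[1]))
        dp = (moved[0] - base[0], moved[1] - base[1])
        for a in range(2):
            for b in range(2):
                ata[a][b] += dq[a] * dq[b]
            for i in range(2):
                atb[a][i] += dq[a] * dp[i]
    # Invert the 2x2 matrix A^T A
    det = ata[0][0] * ata[1][1] - ata[0][1] * ata[1][0]
    inv = [[ata[1][1] / det, -ata[0][1] / det],
           [-ata[1][0] / det, ata[0][0] / det]]
    # Row i of J solves (A^T A) J[i] = A^T b_i
    return [[sum(inv[k][m] * atb[m][i] for m in range(2)) for k in range(2)]
            for i in range(2)]


def predict_tip(tip, J, dq):
    """'Mental simulation': predict the new tip position after a small joint command dq."""
    return (tip[0] + J[0][0] * dq[0] + J[0][1] * dq[1],
            tip[1] + J[1][0] * dq[0] + J[1][1] * dq[1])


q = (0.3, 0.9)
J = fit_jacobian(q)
dq = (0.01, -0.02)
predicted = predict_tip(camera_observe(q), J, dq)
actual = camera_observe((q[0] + dq[0], q[1] + dq[1]))
error = math.dist(predicted, actual)
print(f"prediction error: {error:.1e}")
```

Because the model is fitted from observations rather than written from a specification, changing `LINK1` or `LINK2` and calling `fit_jacobian` again updates it without any reprogramming. In miniature, this mirrors the article’s point about adapting to changes in body shape.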

Why This Matters for the Future of Non-Human Workers

The major breakthrough lies in the robot’s ability to form its own body representation without sensors built into its body. This marks a step toward giving Non-Human Workers the kind of body awareness that humans rely on to move through space. With V-SUIT, robots can adapt to changes in body shape, wear and tear, or added components, all without being reprogrammed from scratch.

The MIT team tested the system on both humanoid and quadruped robots. After a short training period, the robots could track their body poses in real time, even when their appearance changed or they moved in unexpected ways. This flexibility could help robots work in more dynamic environments, from homes to factories.

A Step Closer to Truly Autonomous Robots

This advance in visual self-awareness matters as robots move toward real-world autonomy. By letting robots understand their bodies through vision, engineers could reduce the need for bulky sensors and complex calibration, making robotic systems cheaper and easier to deploy. In practical terms, AI Employees could soon react to damage or adapt to new tools in real time, much as a human worker does.

Looking ahead, this could transform industries where robots must work alongside people or other machines. A Digital Employee that can “see itself” may prove safer, more intuitive, and better suited for collaborative tasks.

Key Highlights:

- What: Introduction of V-SUIT, a vision-based system to teach robots body awareness.

- Why it matters: Replaces physical sensors with visual learning, cutting costs and improving adaptability.

- How it works: Uses RGB cameras and a learning model to create a 3D self-image of the robot.

- Impact: Boosts autonomy and flexibility in AI Employees and Non-Human Workers.

Reference:

https://www.therobotreport.com/mit-vision-system-teaches-robots-to-understand-their-bodies/